In recent years, something has shifted in hobbyist computing. You could date the shift to the Arduino, the Raspberry Pi, the MiSTer, or any of the other popular "hackable" devices, but whichever you pick, independent hardware has had a resurgence as a major background force in computing as a whole. These projects don't boast the enormous numbers of a manufacturing giant like Apple or Samsung, but they have had a role in the computing landscape from the very beginning, and their popularity is evident: Raspberry Pi can claim to have sold 61 million units as of February 2024.

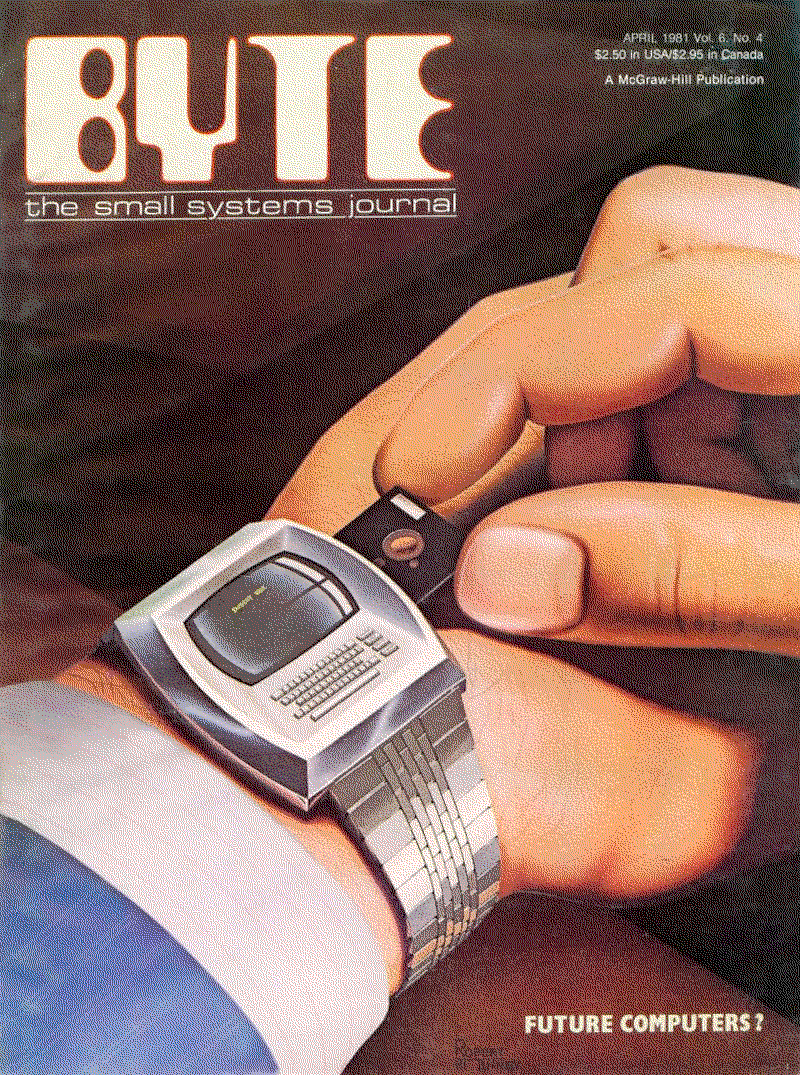

Some historical perspective is needed. In the 1970s, the computing market was disrupting and displacing prior technologies through generational improvements to semiconductor technology: because chip fabrication processes made each generation of chips smaller, the market looked for opportunities to sell either "more power" (big chip designs with more compute capacity) or "more volume" (putting some computing into every possible gadget). In a very short time, electronic calculators, alarm clocks, watches, VCRs and other devices had flooded onto the market, displacing their manual or mechanical counterparts or creating new consumer electronics categories.

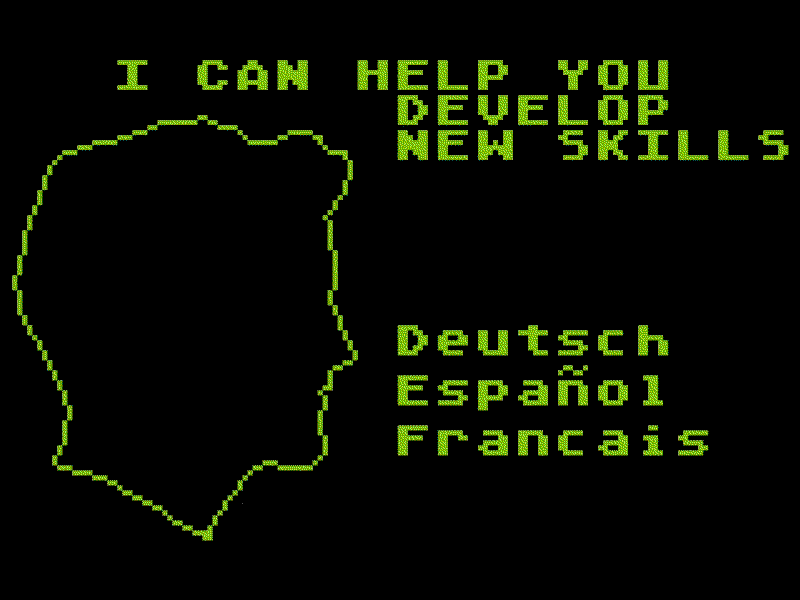

The Atari 800 in-store demo, 1980: "I CAN HELP YOU DEVELOP NEW SKILLS" - Deutsch, Español, Français

The microcomputer was perhaps the most hyped of all of these devices: the nebulous promise was that a computer could make you a more effective and informed individual. In practice, these early machines did find a place in the office, and the 1980s saw many record-keeping and secretarial roles folded into "computerization", but what the younger generation remembers of them can be summed up with William Shatner's pitch for the VIC-20: "plays great games, too."

These computers were limited in part because there was no established ecosystem of parts or standards. Manufacturers cribbed ideas from any source they could find to make keyboards, connectors, storage devices, etc. They made delicate tradeoffs between ease of programming, features, and cost. The machines were mostly incompatible with one another, and the available I/O, memory and storage were so limited that it really did feel like all they could do was play games and print a few things to paper.

On top of that, it was very hard to get information: you had to collect magazines, contact user groups, make phone calls and send letters in hopes of getting some documentation. If you tried to go online, your download speeds were very slow, and connecting outside the local area incurred heavy long-distance fees. If you tried to experiment on your own, you often ended up crashing the system and retyping a lot of work from the beginning. A lot of knowledge was siloed in universities where the research was being done, and in a few companies that hired their talent pool from university programs or out of the defense sector. Computing devices sold for specialized applications in the 1980s were black boxes costing tens of thousands of dollars, often because that was just how much it cost to get hardware useful for that task.

By the middle of the 1980s, the market had shifted away from hobbyists and towards businesses, led by the IBM PC standard, which Microsoft usurped a few years later. Microsoft saw an opportunity to unify PC hardware around their software platform. This unification satisfied some real needs, because it meant that hardware makers could be less involved in software and instead ship one piece of driver code that talked to Microsoft's interface, while software developers could talk to that interface and not worry about what hardware was behind it. It also meant that a larger market for application software could exist: companies like Autodesk and Adobe increasingly started grabbing professional users in this period, giving them a computerized version of familiar tools for industrial design, architecture, graphics, etc.

The change from hardware interfaces to software interfaces required a narrowing of purpose. Microsoft had to imagine the entire world of what computers could do, and provide an interface to that. While it wasn't solely Microsoft's doing, since they collected their information from hardware makers, the goal they had in mind was to build computing in a direction that they could hold monopoly power over. This is accomplished, as we now know well, by making the computer an appealing distraction that locks in customers and harvests their information in order to sell it. Thus, the PC simultaneously became:

- More powerful in specification

- Cheaper to afford

- More appealing for consumer applications

- Disruptive to various professions

- Harder to understand and troubleshoot

- More heavily professionalized for developers

- Higher value and more vulnerable to intrusion

The negative effects were, for a long while, counterbalanced by something you and I have experienced: these computers did, in fact, put more information at our fingertips, and at increasingly lower prices. But notice that they did this in a specific way: the things that computers are better at now are legible tasks like "edit a video" or "purchase a subscription", legible in the sense of being activities that a bureaucracy can track. Once you try to probe anything about how the machine does its computation, it's a complete nightmare, and no human being can hope to comprehend more than a small slice of it. Our approach to verifying that a piece of software is doing its job often boils down to "it looks pretty and is easy to use."

img-o-superman.png

"O Superman", Laurie Anderson's 1981 experimental song conveying the force of the late 20th Century United States as a mother's embrace of "petrochemical, military, electronic arms".

Critics of computing often point to its use as an "imperial control tool" - a way of furthering bureaucratic aims by collecting and processing data.

The problem is that as we go further and further down this direction, information stops improving. The industry is still adding more data to the pile, but data is not information. Information has to have some value, and a lot of the data being made can be filed under that one great misuse of computing: "wrong answers produced infinitely fast". It doesn't matter which particular trending buzzword we point to — the industry is uniformly guilty of this. Thus, we've returned to a certain fear that was common before the microcomputer era: how can you trust a computer? We're ignorant, and it has a lot of power over our lives, so of course we fear it.

This has motivated an undercurrent of interest in all things "retro" - retro games, retro hardware, retro software. At first it might have been dismissed as nostalgia for a simpler time, but it's gained a sort of urgency as the computing ecosystem we've built increasingly looks dangerous and designed to harm us. The software ecosystem can't fix the problem on its own — it needs to have hardware that is made to be hacked on in a deprofessionalized sense, by ordinary people, if it's truly going to serve everyone. Interest in microcomputing correlates with a desire to reassert user control. We have to reimagine computing to see the alternatives to an IBM or Microsoft-style platform. Further, we have to explore the things that we've left untried or abandoned in prototype form. I am confident that there is wisdom in old techniques that we rushed past before.

Fortunately, the hardware landscape has become much more mature than it was at the dawn of the microprocessor. Memory and storage are now plentiful for any kind of "personal" application, and there are many companies selling boards and chips to hobbyists. Conventional protocols for I/O exist, and are well-documented, with plenty of examples. Everyone who has a hardware project can share it to the whole world instantly through a video or blog post.

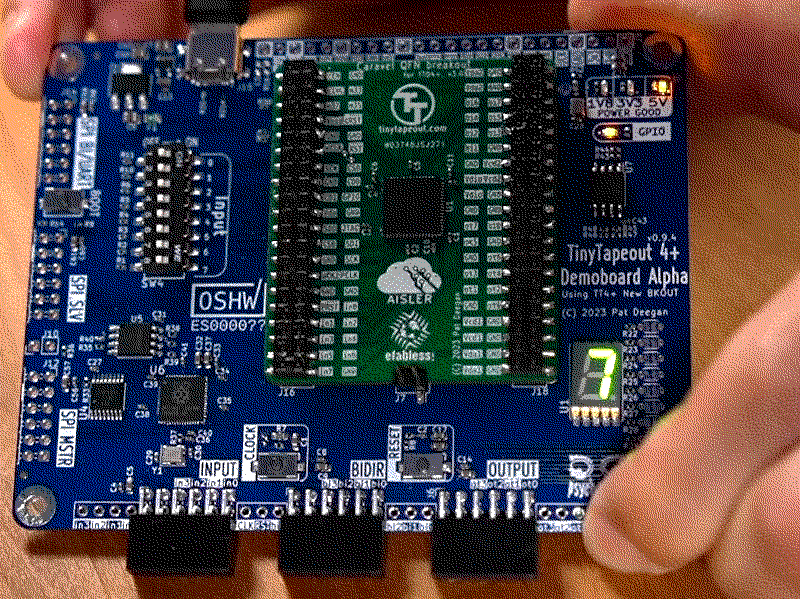

Tiny Tapeout 4+ development board - an ASIC launched in July 2023, containing 143 projects from over 30 countries.

Let's take a moment to marvel at how far the computing hobby has come since the early years:

- Cheap, powerful single-board computers

- Online shopping for unusual parts

- Standardized I/O ports and protocols

- Custom PCB design

- 3D printed plastics

- Gate-level design and synthesis tools

- Tiny Tapeout's project to improve hobbyist access to ASIC design