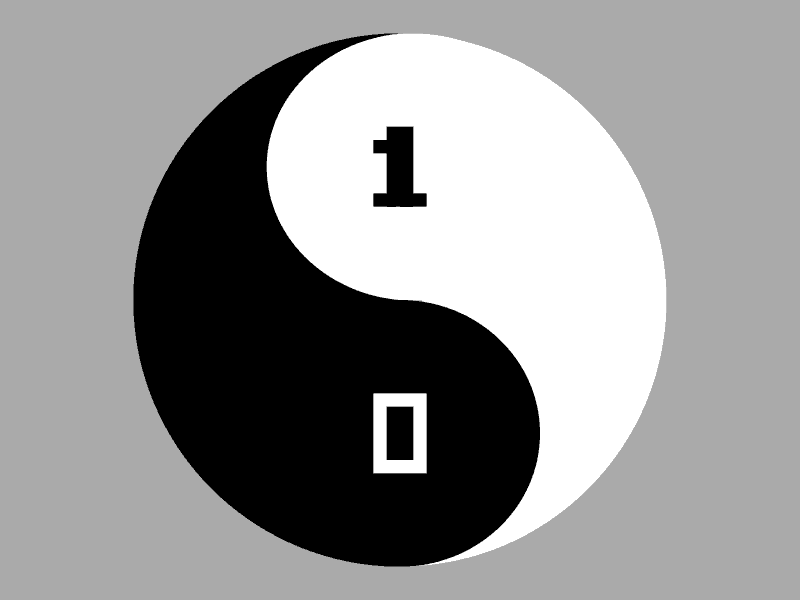

Certain themes recur throughout digital technology. All of them ultimately derive from the binary nature of computers: the “flip-flop” between on and off has an unavoidable, cosmological power, and once you start looking, you find it everywhere. Here I list a few that I find interesting, though by no means all of them.

Binary vs Decimal

We like to work in decimal because it is the convention of our current mathematics (although historical civilizations often settled on other bases for counting). Digital technology, however, directly represents binary, so we have converged on numeric standards built on powers of two, which are straightforward to implement. To make sense of those numbers we constantly convert between decimal and binary representations, and the conversion leaks. The well-known result "0.1 + 0.2 = 0.30000000000000004" in JavaScript happens because neither 0.1 nor 0.2 has an exact binary floating-point representation: each is rounded to the nearest binary fraction, and the rounding errors surface in the sum.
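The same behavior can be reproduced in Python, whose floats use the same IEEE 754 binary64 format as JavaScript numbers. This is a small illustration rather than anything specific to the text: the standard-library `decimal` and `fractions` modules expose the exact binary value hiding behind the literal `0.1`.

```python
from decimal import Decimal
from fractions import Fraction

# Python floats, like JavaScript numbers, are IEEE 754 binary64 values.
total = 0.1 + 0.2
print(total)         # 0.30000000000000004
print(total == 0.3)  # False

# Converting the float (not the string) to Decimal reveals the exact stored value.
print(Decimal(0.1))
# 0.1000000000000000055511151231257827021181583404541015625

# Every finite float is an integer divided by a power of two; 1/10 is not of that form.
denominator = Fraction(0.1).denominator
print((denominator & (denominator - 1)) == 0)  # True: the denominator is a power of two

# Decimal arithmetic works in base ten, so the "intuitive" answer comes back.
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))  # True
```

Base-ten arithmetic like `Decimal` trades speed for familiarity, which is one reason hardware sticks with binary.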

Parallel vs Serial

When describing a signal in binary, we can opt to go “wide” and describe it as a group of values set on and off at once, or “narrow”, as a sequence of on and off signals over time. The same choice appears in our earliest uses of symbolic communication. Pictograms encode more within a single symbol than alphabets do, since alphabets rely on the additional context of a character sequence. The analogy continues into the industrial era with the coexistence of punch cards and Morse code: one a record that shows “everything at a glance”, the other a message that unfolds over time.
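As a minimal sketch of the wide/narrow distinction, the functions below present one byte either as eight simultaneous "wires" or as eight time steps on a single wire. The function names are hypothetical, and the least-significant-bit-first order of the serial form is an assumption (it matches common UART framing, minus start and stop bits).

```python
from typing import Iterator, List

def to_parallel(byte: int) -> List[int]:
    """'Wide': all eight bits presented at once, one per wire, most significant first."""
    return [(byte >> i) & 1 for i in range(7, -1, -1)]

def to_serial(byte: int) -> Iterator[int]:
    """'Narrow': the same bits sent one per time step on a single wire, least significant first."""
    for i in range(8):
        yield (byte >> i) & 1

def from_serial(bits: Iterator[int]) -> int:
    """Reassemble a byte by accumulating bits as they arrive over time."""
    value = 0
    for i, bit in enumerate(bits):
        value |= bit << i
    return value

letter = ord("A")  # 65 = 0b01000001
print(to_parallel(letter))                       # [0, 1, 0, 0, 0, 0, 0, 1]
print(list(to_serial(letter)))                   # [1, 0, 0, 0, 0, 0, 1, 0]
print(from_serial(to_serial(letter)) == letter)  # True
```

The parallel form needs eight lines but one instant; the serial form needs one line but eight instants, and the receiver must keep state while it waits.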

Time vs Space

When we discuss algorithms and efficient processing of data, there is also a trade-off of space versus time. Sometimes we can store many answers locally and use them to solve a problem in one step; other times we need to "unpack" or "simulate" our way to the answer. Sometimes saving space also saves time, by reducing the burden of “carrying” answers around. This trade-off has flipped back and forth repeatedly over the history of computing. Today many solutions favor space, because we have plenty of room on the chip but face physical limits on increasing clock speed, so we have gained more memory and more parallelism instead.
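A classic small instance of this trade-off is counting the set bits in an integer. The sketch below (function names are hypothetical, and non-negative inputs are assumed) computes the answer bit by bit in one version, and in the other spends a 256-entry table up front so that each byte costs a single lookup.

```python
def popcount_compute(x: int) -> int:
    """Time: recompute the answer on every call by shifting through each bit (x >= 0 assumed)."""
    count = 0
    while x:
        count += x & 1
        x >>= 1
    return count

# Space: store all 256 one-byte answers once, computed with the slow method.
BYTE_TABLE = [popcount_compute(b) for b in range(256)]

def popcount_table(x: int) -> int:
    """Handle eight bits per step by looking up precomputed answers (x >= 0 assumed)."""
    count = 0
    while x:
        count += BYTE_TABLE[x & 0xFF]
        x >>= 8
    return count

print(popcount_compute(0xF0F0), popcount_table(0xF0F0))  # 8 8
```

Python 3.10 and later also offer `int.bit_count()`, and many CPUs provide a dedicated population-count instruction, which pushes the answer down into silicon.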

Samples vs Instructions

When we describe I/O signals like audio and video, sometimes they take the form of a field of regularly spaced samples, and other times a sequence of instructions. For example, most of our images are “raster” graphics: pixel samples arranged in rows and columns. But some are “vector” graphics, generated by describing how a line should be drawn or a region filled in to produce a shape. Analogously, when we discuss text strings, we usually think of characters as the countable elements. But the underlying implementation of text is an instruction stream, reflecting its history as telegraphy evolved into teletype systems. The instruction set, with control codes such as carriage return and line feed, enabled two-dimensional control over a typewriter: both the spacing of characters and the vertical movement of the page. In modern Unicode, instructions additionally allow a single visible character to be composed from multiple code points, or to take alternative formatting. Most of the programming hazards associated with text reflect the complexity of this representation: the meaning of any one element can depend on its neighbors, so "understanding" the text is only available piecemeal.
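Python's standard `unicodedata` module makes the instruction-like layer visible. In this short sketch the same visible "é" is written two ways: as one precomposed code point, and as a plain "e" followed by a combining accent that modifies the character before it.

```python
import unicodedata

precomposed = "\u00e9"  # "é" as one code point: LATIN SMALL LETTER E WITH ACUTE
combining = "e\u0301"   # "e" followed by COMBINING ACUTE ACCENT, which modifies the "e"

print(precomposed, combining)            # both typically render as é
print(len(precomposed), len(combining))  # 1 2
print(precomposed == combining)          # False

# Code point names expose the sequence behind the single visible character.
print([unicodedata.name(c) for c in combining])
# ['LATIN SMALL LETTER E', 'COMBINING ACUTE ACCENT']

# Normalization rewrites either form into a canonical one before comparison.
print(unicodedata.normalize("NFC", combining) == precomposed)  # True
print(unicodedata.normalize("NFD", precomposed) == combining)  # True

# Teletype-era control codes survive as ordinary characters: carriage return, line feed.
print([ord(c) for c in "\r\n"])  # [13, 10]
```

Counting "characters" with `len` gives different answers for text that looks identical, which is exactly the piecemeal understanding described above.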

Linear vs Hierarchical

Examined at one scale, computing can be a “sequence of steps” indifferent to the kind of processing being done. At another, computing has a central logic: a mechanism that asserts control, and subsidiary mechanisms to which it delegates the specifics. For example, assembly languages follow linear steps, but subroutines within assembly code enact a hierarchy. When we write programs in infix syntax, we start with a linear character stream and then parse it into a tree representation. What counts as central shifts around at different scales, but centrality is a recurring theme even within “decentralized” systems: consensus is maintained by accepting some core protocol or data structure as the ground truth.
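The step from a linear character stream to a tree can be sketched with a tiny recursive-descent parser. This is one common technique among several (shunting-yard is another), and it assumes a toy grammar of non-negative integers, `+ - * /`, and parentheses; `tokenize` and `parse` are hypothetical names, and error handling is minimal.

```python
import re
from typing import List, Tuple, Union

# A tree is either a number (leaf) or a tuple (operator, left subtree, right subtree).
Tree = Union[int, Tuple[str, "Tree", "Tree"]]

def tokenize(text: str) -> List[str]:
    """Linear stage: split the character stream into a flat list of tokens.
    Whitespace and unrecognized characters are simply skipped."""
    return re.findall(r"\d+|[-+*/()]", text)

def parse(tokens: List[str]) -> Tree:
    """Hierarchical stage: * and / bind tighter than + and -, all left-associative."""
    pos = 0

    def peek() -> str:
        return tokens[pos] if pos < len(tokens) else ""

    def take() -> str:
        nonlocal pos
        if pos >= len(tokens):
            raise SyntaxError("unexpected end of input")
        pos += 1
        return tokens[pos - 1]

    def expr() -> Tree:  # expr := term (("+" | "-") term)*
        node = term()
        while peek() in ("+", "-"):
            op = take()
            node = (op, node, term())
        return node

    def term() -> Tree:  # term := atom (("*" | "/") atom)*
        node = atom()
        while peek() in ("*", "/"):
            op = take()
            node = (op, node, atom())
        return node

    def atom() -> Tree:  # atom := number | "(" expr ")"
        tok = take()
        if tok == "(":
            node = expr()
            if take() != ")":
                raise SyntaxError("expected ')'")
            return node
        return int(tok)

    tree = expr()
    if pos != len(tokens):
        raise SyntaxError(f"unexpected token {tokens[pos]!r}")
    return tree

print(parse(tokenize("1 + 2 * (3 - 4)")))
# ('+', 1, ('*', 2, ('-', 3, 4)))
```

The root of the resulting tree is the operation that "asserts control", delegating its operands to subtrees, even though the input was a flat sequence.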

Cyclical vs Retained

Many historical information systems relied on something revolving: physical wheels and discs were common in the past, in systems like tape programs and drum memory, and they remain so today with optical discs. Schemes that span multiple computers often use some kind of round-robin cycle that passes authority from one computer to the next.
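A round-robin handoff of authority can be sketched as a token circulating around a fixed ring. This is a hypothetical, single-process model (real token-passing networks must also handle lost tokens and failed nodes): `token_ring` lets only the current holder append one message to a shared log before passing the token on.

```python
from collections import deque
from typing import Deque, Dict, List

def token_ring(pending: Dict[str, List[str]], turns: int) -> List[str]:
    """Hypothetical round-robin sketch: a single token circulates around a fixed ring
    of nodes, and only the node holding it may append one message to the shared log."""
    queues = {node: list(msgs) for node, msgs in pending.items()}  # copy; caller's lists untouched
    ring: Deque[str] = deque(queues)  # ring order follows dict insertion order
    log: List[str] = []
    for _ in range(turns):
        holder = ring[0]  # the node that currently holds authority
        if queues[holder]:
            log.append(f"{holder}:{queues[holder].pop(0)}")
        ring.rotate(-1)   # pass the token to the next node in the cycle
    return log

print(token_ring({"A": ["a1", "a2"], "B": ["b1"], "C": []}, 6))
# ['A:a1', 'B:b1', 'A:a2']
```

The shared log is the "retained" counterpart: the cycle decides who may write, while the log keeps what was written in a fixed state.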

The alternative to that is to have a way of retaining information in a fixed state: with stone carvings, permanent inks, physical connections, latches, pulses of electricity, trusted authorities, etc.

Extension vs Application

The essential dichotomy of high-level coding (coding above a specific machine language) can be described as "Lisp vs Fortran".

When Fortran was introduced in the 1950s, it oriented programming toward a specific application that computers were being used for: numeric computation. The language had a syntax that abstracted away the details of "load and store", and it provided representations of numbers and strings, control flow logic, and the use of library code. COBOL, which followed a few years later, did the same for business applications. In effect, you could "fill out a form", and the compiler would check that form and provide guard rails that helped you finish the application. Both languages are successful and still in use: the applications have not fundamentally changed, so the languages have not needed to change fundamentally either.

In the 1960s, computer scientists turned their attention toward the idea of extensible programs, spurred on by the progression from the first assembly "autocoders" to the development of Lisp. Extensible programming operates on the principle that a language should be redefinable within itself, and can therefore be customized to the needs of the individual programmer. Nobody could agree on what Lisp itself was supposed to look like, so the language fragmented into a space where many people make their own personal Lisp. Meanwhile, the extensibility researchers attempted to define a language plus a "meta" language, and found it difficult to support any further layers: too many details of the implementation drifted upward into the meta language.

Programming by extension resists standards and broad consensus, so by the mid-1970s extension was unfashionable, but it never really died out. The C language retained a preprocessor with macros, a feature intimately tied to the language but also considered confusing and dangerous. Forth, which gained some usage in the late 1970s, was to some extent motivated by the idea of making the "smallest Lisp", redefining the language in the process to make it easier to bootstrap. Forth remains interesting because it is an exercise in complecting more and more functionality into less and less space.
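Part of what makes C macros dangerous is that they substitute text, not values. Python has no preprocessor, so this sketch simulates one with `re.sub`: the hypothetical `expand_square` mimics the naive macro `#define SQUARE(x) x*x`, and `expand_square_safe` mimics the conventional parenthesized fix `#define SQUARE(x) ((x)*(x))`. Only a flat, unnested argument is handled.

```python
import re

def expand_square(source: str) -> str:
    """Mimic the naive C macro `#define SQUARE(x) x*x` by pasting the argument text verbatim."""
    return re.sub(r"SQUARE\(([^()]*)\)", r"\1*\1", source)

def expand_square_safe(source: str) -> str:
    """Mimic the conventional fix `#define SQUARE(x) ((x)*(x))`."""
    return re.sub(r"SQUARE\(([^()]*)\)", r"((\1)*(\1))", source)

naive = expand_square("SQUARE(1+2)")
print(naive, "=", eval(naive))  # 1+2*1+2 = 5
safe = expand_square_safe("SQUARE(1+2)")
print(safe, "=", eval(safe))    # ((1+2)*(1+2)) = 9
```

Even the parenthesized version still pastes the argument twice, so in C an argument with side effects, such as `SQUARE(i++)`, remains a hazard.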

The languages of the 1970s and 1980s became less focused on specific fields like "science" or "business", and more interested in large-scale abstraction, code reuse, and error reduction. The poster child of this phenomenon was the movement toward object-oriented programming. First articulated in Simula and Smalltalk, the idea of programs as objects, each a black box talking through a limited interface, was extremely attractive to corporations that wished to maximize code reuse and so avoid the expense of new development.

By the late 1990s, object-oriented mania had taken hold. The problem was that objects needed to be configured to be reused. To fulfill the promise of reuse, XML, the “extensible markup language”, entered as the glue holding it all together. The sheer number of misguided things written in XML is evidence of its effectiveness as a Lisp.

Today we've turned the wheel yet again and introduced new languages that make another attempt at the "meta" layer: Rust has macros, Zig has "comptime", and Clojure has brought Lisp to the Java Virtual Machine, to name just a few.

We are still writing new Fortran code.

In Conclusion

While we are still early in the history of how best to express computations, there are clear patterns to take note of, more than I've listed here. The way we talk about computing itself has changed, in part because we have more historical examples, and many ideas that were exciting in research decades ago have had time to be refined. More recent research systems like the VPRI STEPS project demonstrate that sophisticated computing environments don't need "complex" languages: clarity in specifying the application creates the preconditions for ease of expression, low error rates, and good performance. Regardless, we still thirst to add configuration to everything. That thirst motivates the use of extension where it's appropriate, but it also creates tremendous pressure to do more computing in every sense: more hardware, more software, more abstract layers.